At Modash, we're solving influencer marketing problems for some of today's most well-known brands.

One of the key problems faced by our clients is the issue of finding the right social media creators to partner with at scale. For example, when entering a new market it is difficult for a brand to know the influencers that best align with their brand’s identity in that region. But finding the right audience is often make or break.

Modash offers an intuitive discovery tool to solve just that issue, enabling brands to source hundreds and thousands of influencers for their campaigns. To do that effectively, it’s important to understand the two core dimensions of search: the content creators publish and the qualities they represent. In practice, most customer searches combine both.

- Content Search – finding content about a specific topic. Think a sports supplement brand trying to locate posts that specifically feature “creatine use,” such as videos explaining how to take creatine effectively or posts discussing common misconceptions about creatine.

- Creator Search – finding Creators who match a brands values and characteristics. Think an eco-friendly skincare brand that wants to partner with creators who genuinely embody a sustainable lifestyle. Instead of searching for creators who’ve posted about “recycled packaging”, the brand wants creators who live the values: vegan, zero-waste advocates, plant-based lifestyle influencers.

Let’s look into how Modash has approached the two use-cases below.

Content Search

Historically social media discovery tools (also Modash) have relied on textual data to pinpoint key topics creators post about: namely hashtags and descriptions provided with each post.

If you only rely on hashtags and captions/descriptions, you’ll run into an issue: a lot of post captions and hashtags only give complimentary information, or simply aren’t there at all. That means you may not get the intent of the post just by looking at the accompanying text. Thus, via text, a lot of content and creators on social media aren’t searchable.

To address the underlying issue, the field has moved towards a more holistic approach where the actual visual media is incorporated into the search.

Multimodal AI – Search on Visuals

We want our customers to be able to describe in free-form text the type of content they expect to see – similar to Google Image Search.

To do this, we can leverage multimodal machine learning models such as CLIP. These models are trained using an approach called Contrastive Learning (CL), which teaches two models (encoders) - one for text and one for images - to understand and encode their respective inputs in a unified way.

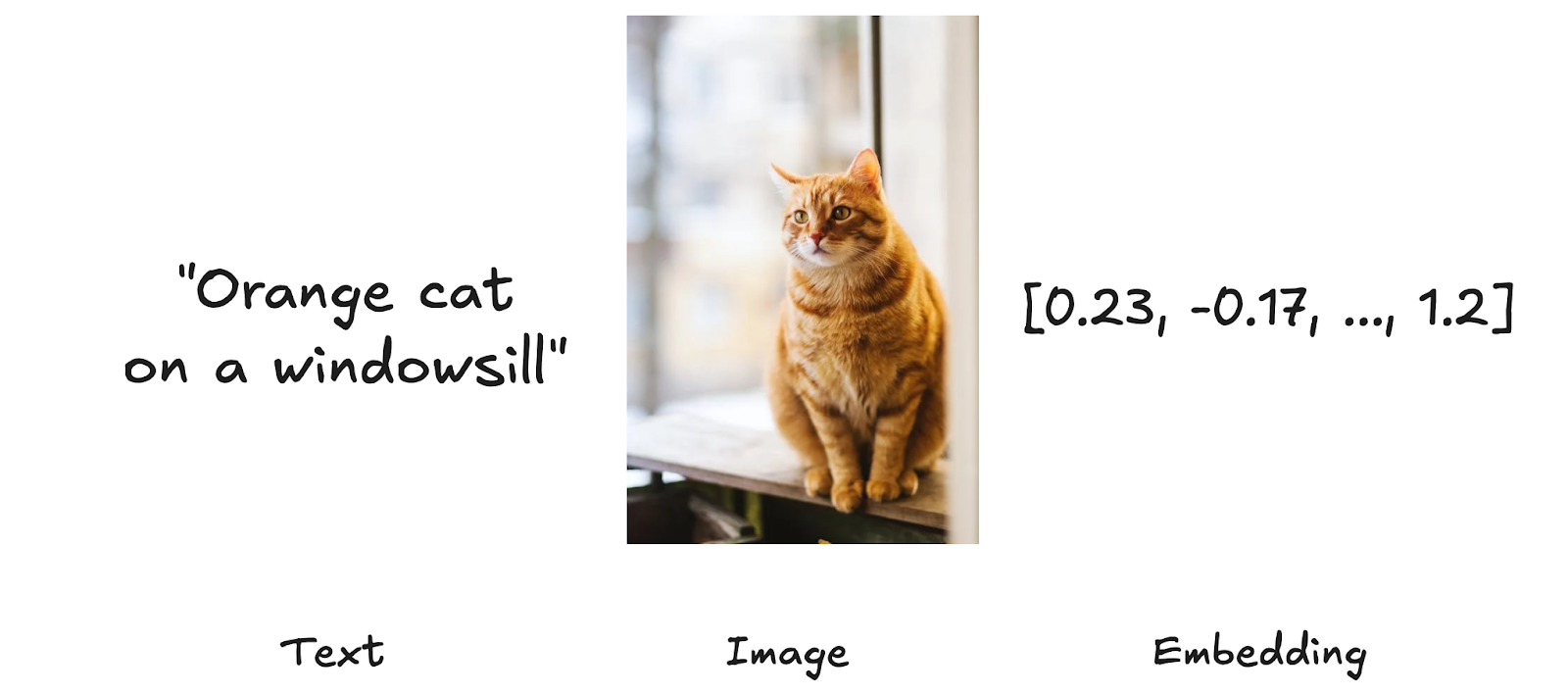

In practice, this means the model can process the word “cat” and an image of a cat, and generate embeddings – numeric vector representations – that capture the same underlying concept. While this kind of association is intuitive for humans, it is a non-trivial task for software systems without built-in contextual understanding of the world. Embeddings are just information like text and images, but only made understandable for ML systems!

Because both encoders produce embeddings in the same shared latent space, the system can directly compare text and visuals. This allows us to search image content using free-form textual descriptions, enabling discovery that goes far beyond hashtags and captions.

In Practice

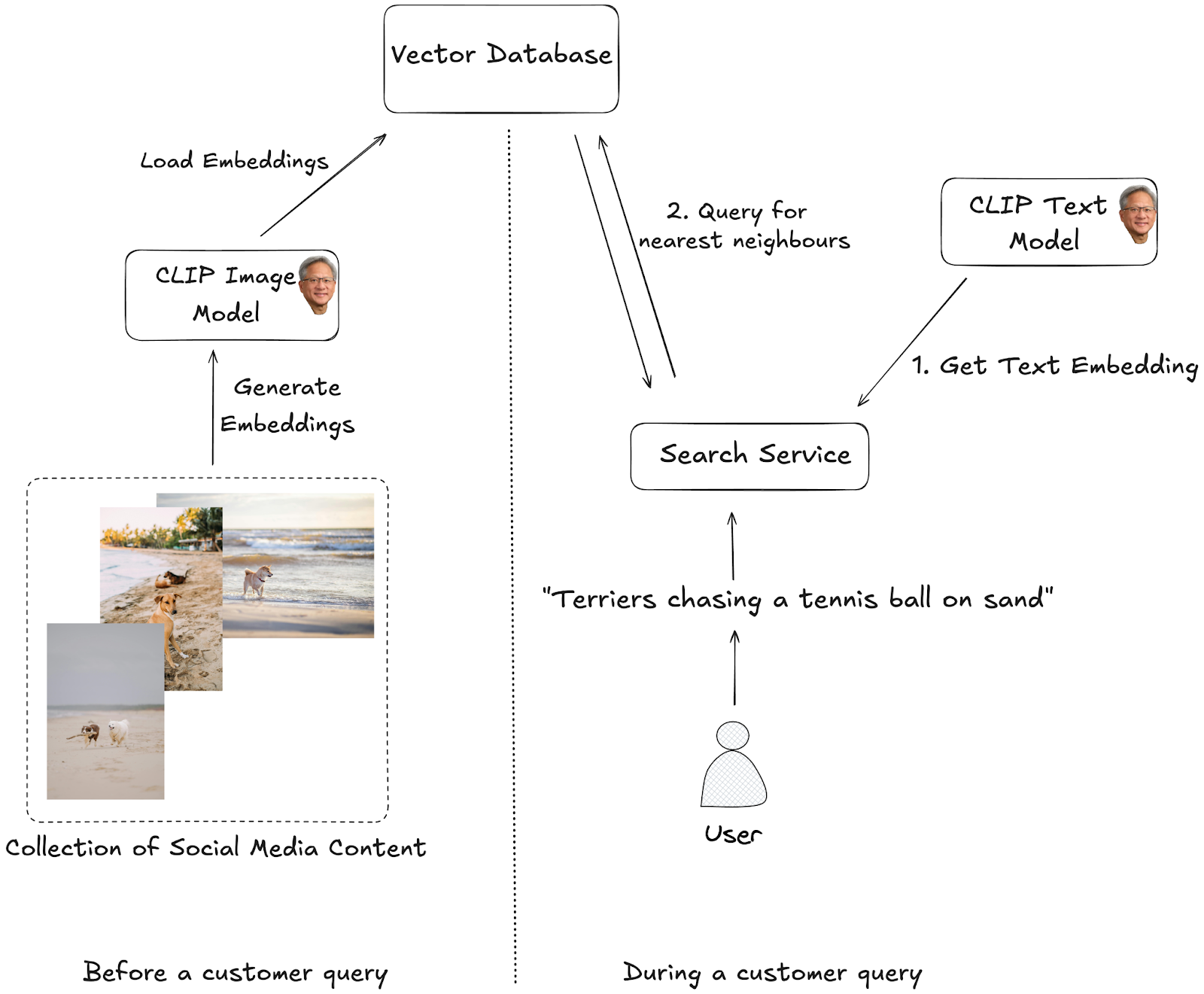

To make a contrastive lookup work at scale, we need to do two things to prepare the system for future customer queries:

- Embed all images and videos with the image encoder from CLIP.

- Index the embeddings in a VectorDB.

Building the embedding indexes is the main crux to being able to retrieve similar neighbours to a query in less than a second. Luckily most of the complexity in creating the indexes is handled by the Vector Databases behind the scenes.

When the indexes are production ready, then every embedding in the database holds references to a few other most similar embeddings. When these references are formed ahead of time, then we can easily retrieve the N most similar posts when a customer sends a query over to us.

With the above two steps completed ahead of time, getting to serving customer requests is simple: In production, when a customer sends a query:

- Embed the query the customer sent with the text encoder from CLIP.

- Run a similarity lookup against the index formed in the previous step 2 to get N closest visual matches to the user query.

There are many great resources on the web going into more details on how exactly vector databases work – e.g. one such from Elastic - but most use some variant of Approximate Nearest Neighbours (ANN) algorithm to efficiently find similar matches.

Incorporating Metadata

While visual search dramatically expands what can be discovered, relying solely on images and video still leaves valuable contextual information unused. Post metadata can enrich the search experience in a few complementary ways.

One option is full-text search, where queries are matched directly against indexed text. This approach is straightforward, but it has a significant drawback: it cannot account for semantic similarity. As a result, users may miss relevant posts that express the same idea using different wording.

A more integrated approach is to use CLIP’s text encoder to convert metadata – such as captions, descriptions, or hashtags – into embeddings and index them alongside the visual embeddings. This places text and visuals into the same representational space, allowing the system to balance both sources of information. Depending on the user’s query, the search can surface results driven primarily by text, primarily by visuals, or a natural blend of both, leading to more consistent and context-aware discovery.

Technical Caveats

GPU Processing

The biggest bottleneck with such a pipeline is the embeddings generation, which is GPU-bound. Processing of billions of images and videos requires many thousands of GPU hours even on the fastest hardware out there. Getting the desired amount of compute on a single cloud provider might not be possible. So iterative scaling and processing might be needed. An alternative could be to consider multiple hosting providers, but that increases the implementation overhead and egress fees that will pile up with the petabytes worth of data to process.

Model Choice

The choice on the model to use is an important one. First, from a capabilities perspective: unless the model is fine-tuned to our needs, then its important to find one that has been trained on a dataset representative of the data seen in production.

Second, choosing between open-source or third party solutions will determine the cost and processing speed. There’s less wiggle room in terms of throughput and cost when going with a third-party model, like the one from Google. Going with an open-source alternative leaves more knobs to tune in terms of speed and cost as the model, underlying infrastructure, and inputs can be configured to your needs.

Luckily there are plenty of very capable open-source CLIP variants available, with public leaderboards like INQUIRE, open_clip also comparing the models across different benchmarks.

Creator Search

While finding individual pieces of content is a well-defined task, it’s a lot trickier to find the right creators. Instead of analysing one post at a time, we need to understand the broader patterns, values, and behaviours that emerge across a creator’s entire profile. Attributes like being a sustainability-focused parent, a fitness-obsessed weekend warrior, or a budget-conscious home cook rarely live in a single post. Instead, they emerge gradually as a pattern in how a creator presents themselves, making search more nuanced.

When Content Alone Misleads

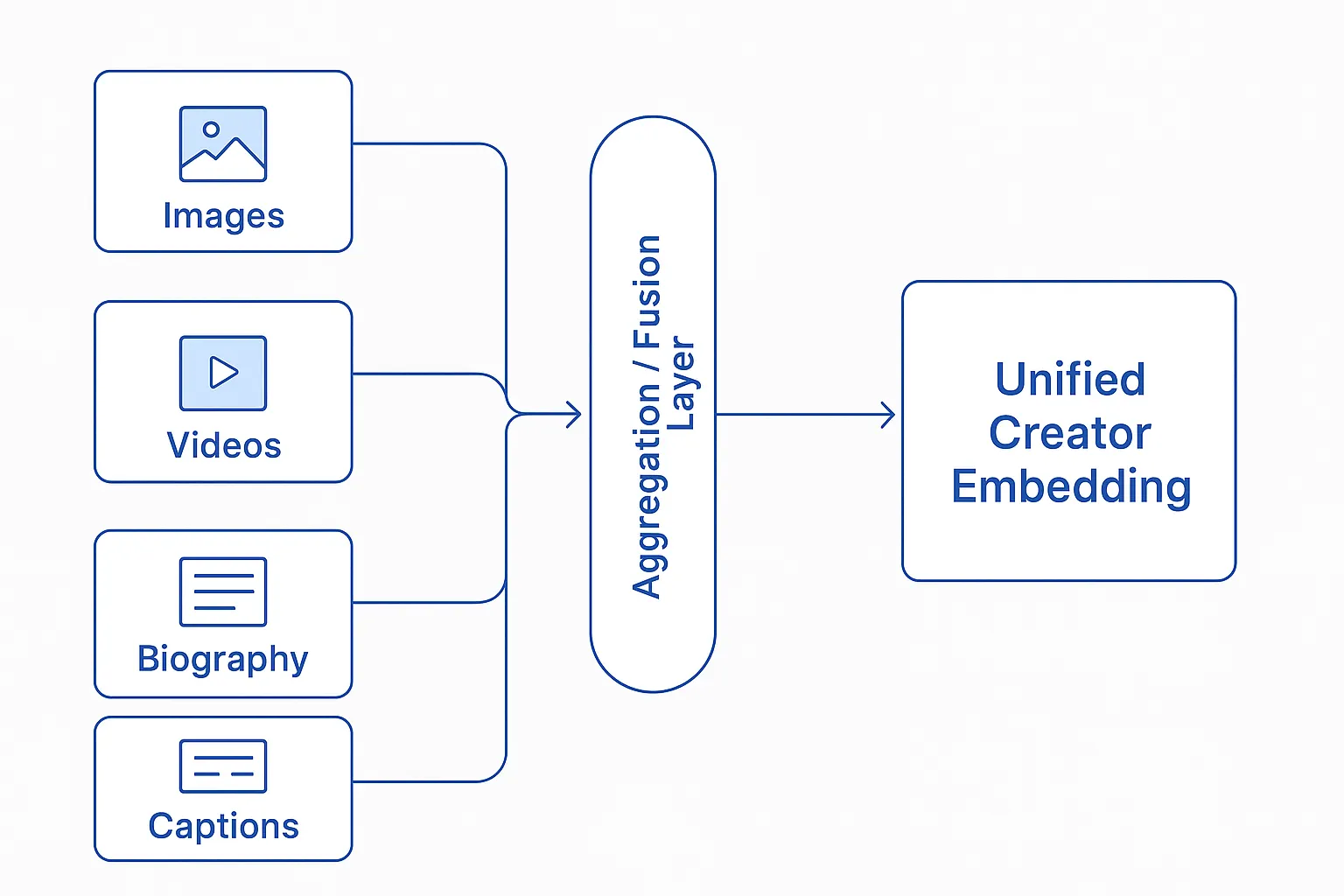

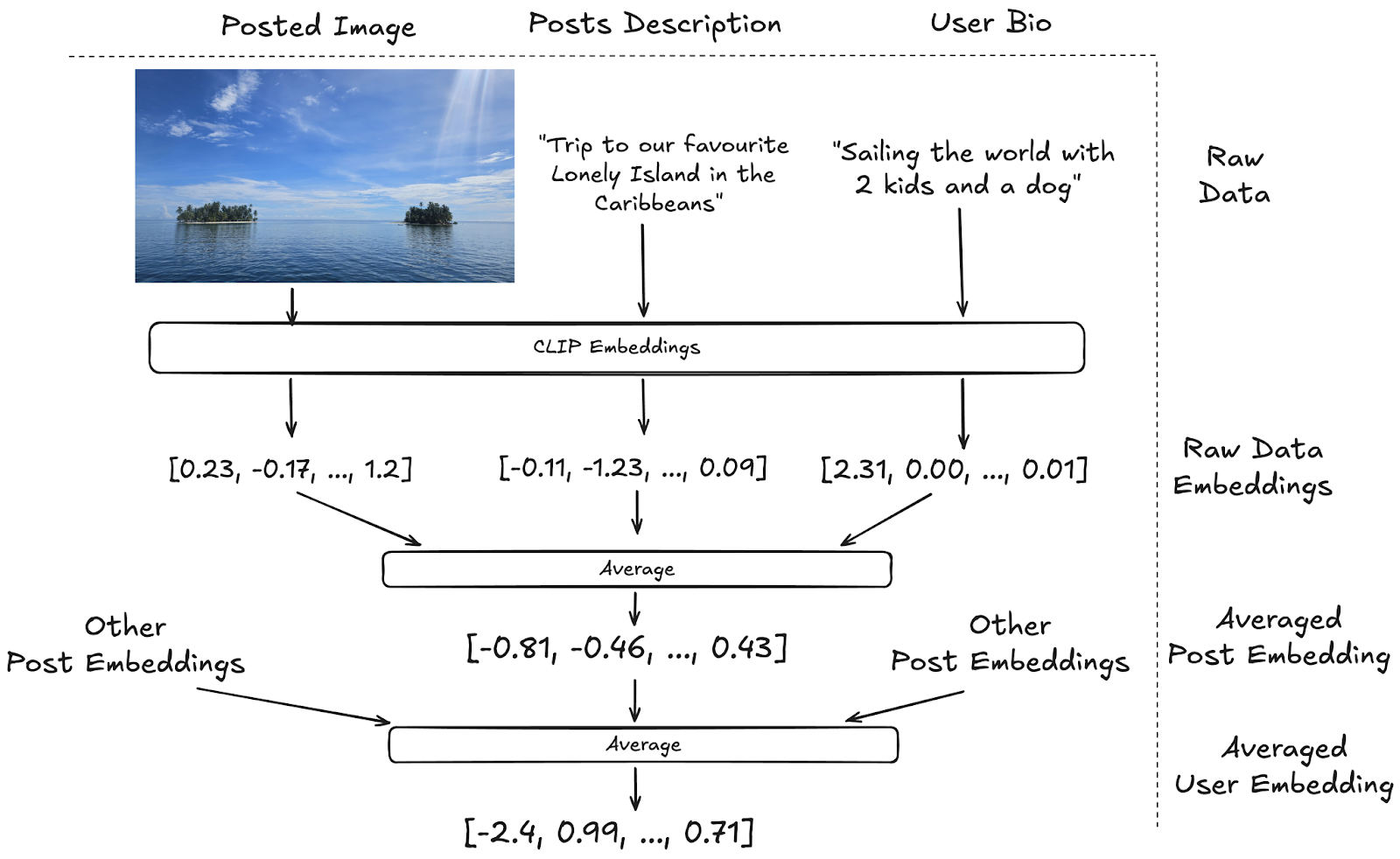

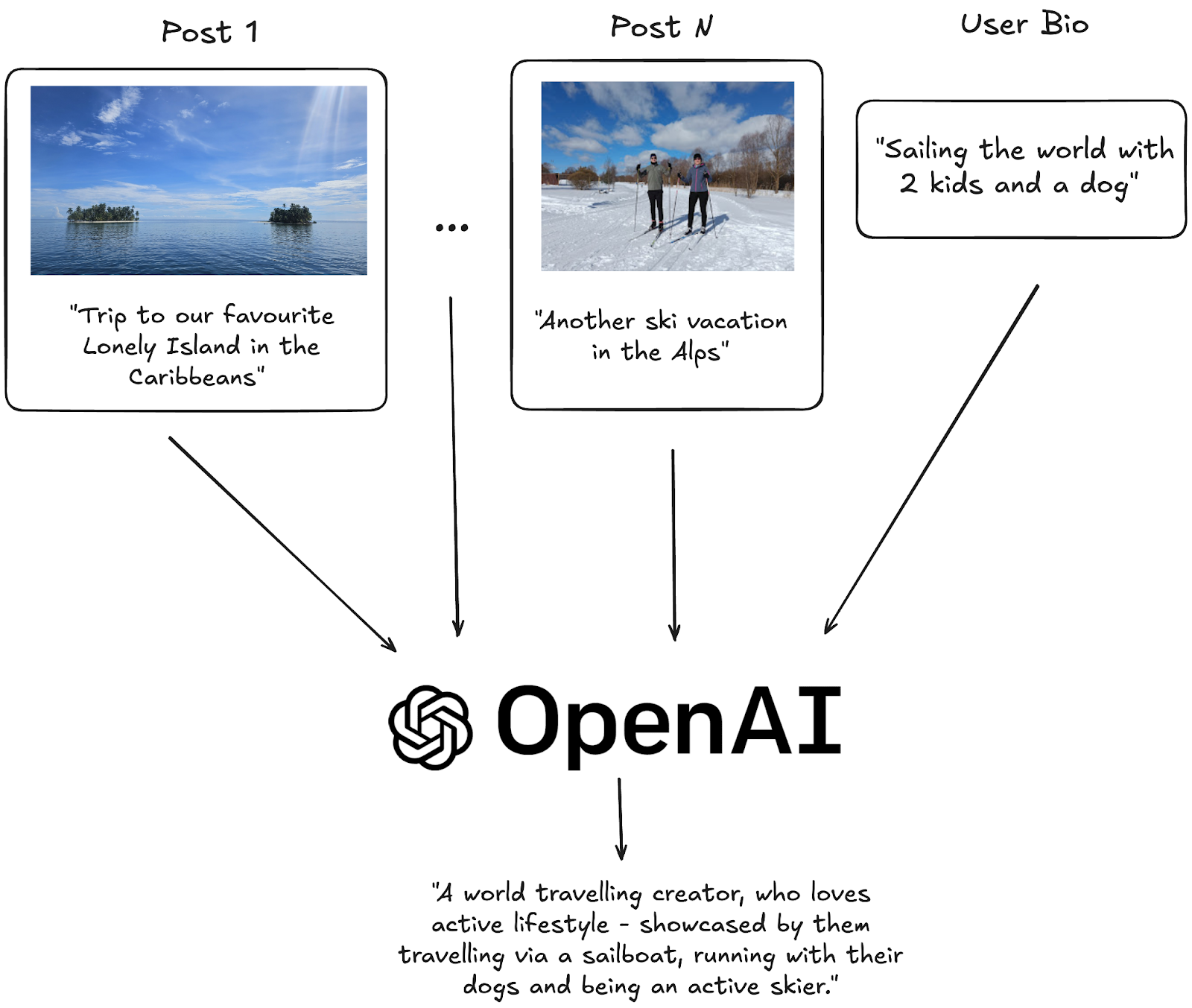

To create that nuanced, full picture of a creator, you’ll have to aggregate information from many different data sources at once: posted content, as well as metadata, such as biography and post descriptions.

Consider a creator whose bio describes them as a professional dietician, while their content explores a range of contrasting diets – vegan, carnivore, keto – and evaluates their impact on performance and measurable health metrics.

If we relied solely on a content-centric approach, this creator might be frequently suggested for vegan food brands simply because some posts include vegan meals. In reality, their audience is far more likely to consist of people interested in personal optimisation, experimentation, and evidence-driven lifestyle choices. Without aggregating the full set of signals, the true nature of the creator – and their audience – would be missed.

A Unified Representation

All these data sources need to be transformed into something that’s actually searchable. In practice, this means converting them into embeddings. Raw text can’t support true semantic search on its own – even with term-expansion methods like SPLADE – so embeddings become the natural way to unify diverse signals.

There is also a key technical limitation to consider. An ANN index is built around a single embedding space, which means it can only store and retrieve neighbours for one vector at a time. Because each embedding field produces its own independent vector space, ANN structures can’t easily be merged, layered, or intersected in a scalable way.

In practical terms – there’s no efficient way to compute a cross-section of multiple ANN queries at a large scale.

As a result, all data sources must be brought together into a single embedding that represents the creator holistically – a challenging task. How do we build such a unified representation?

Aggregate Content Embeddings

One idea is to reuse the embeddings generated for Content Search and combine them – for example, by averaging all image or video embeddings for a profile. Additional text embeddings from bios or post metadata could be included to enrich the signal.

At scale, however, this approach brings two main downsides. First, a creator may have hundreds of posts, and averaging all or even a subset of those embeddings (especially when mixed with metadata) can wash out the distinctive signals that matter.

Second, simply averaging embeddings doesn't actually capture how visual and textual signals relate and reinforce each other.

LLMs for Everything

As LLMs are the answer to everything nowadays, then we could try to leverage them here as well. Vision Language Models (VLMs) could be used to generate textual descriptions of all of the above datapoints at once. The subsequent textual description can be embedded to enable semantic lookups.

The immediate downside is obvious – LLMs are costly. Especially given that vision inputs generate a lot of tokens. We don’t know whether an information-dense textual description is able to retain good retrieval accuracy on everything included once it’s all embedded. Another potential issue is when customers expect to search for visual details not captured by the VLM – meaning translation from image to text will always lose information.

Custom Encoder

Another option is to train a custom encoder tailored to creator-level data. We need to use Contrastive Learning in this case to retain comparisons against customer queries in production. Using CL requires forming a custom dataset of similar and dissimilar pairs for training purposes – doing so will require either costly manual labour, clever heuristics on existing data, or many LLM calls (or all three).

While a custom model should perform the best in theory, it demands much more effort, work, and experimentation than options which leverage existing solutions. Thus, as usual, it’s recommended to first piece together a baseline from those existing solutions, and only then consider a fine-tuned methodology.

All of the highlighted ideas bring their own upsides, but also have significant downsides. Choosing which one to pursue is ultimately up to you.

No matter the approach chosen, we are left with an embedding representation at the end that can be plugged in to a vector database to enable Creator Search.

Conclusion

There are plenty of challenges we’ve solved - and many we’re still exploring. Database optimisations, GPU-efficient processing, and blending multimodal signals at scale all remain active areas of work.

If you're the kind of person who thinks in solutions, here are a few prompts to noodle on:

- How would you build a creator-level embedding that stays signal-rich?

- How would you mix metadata and visuals without relying on expensive VLMs?

- How would you scale vector indexes as the dataset grows rapidly?

- How would you layer complex filters on top of ANN lookups efficiently?

If these questions spark ideas, we’d be excited to hear how you’d approach them. Reach out.

There’s also a great visual overview of Modash’s AI Search in the feature’s launch video - be sure to check it out!