The creator economy is trapped in a unique paradox: while the volume of social data generated by active users continues to explode, the walled gardens have been busy bricking up their gates and accessibility of that data has contracted.

Today, choosing a social data vendor means choosing an architectural philosophy, not just a feature set. Get this wrong, and you'll spend six months building infrastructure instead of product.

Here is a taxonomy of how modern dev teams actually acquire social data, categorizing the market into four distinct architectural approaches:

- The pipeline (Pre-indexed infrastructure): Decoupled collection and data delivery designed for high-scalability discovery (e.g. Modash).

- The shovel (On-demand Collection): Raw extraction tools for dev teams willing to manage and maintain actively (e.g. Apify, Bright Data).

- The walled garden (Official platform APIs): Official interfaces for managing owned assets (e.g. Meta Graph API).

- The consent model (Authenticated gateways): User-permissioned integrations designed for authenticated data (e.g. Phyllo).

Modash API: The pre-indexed data pipeline

Philosophy: "We design, refine, and deliver the engine; you build the car."

Designed for developers who need to ship powerful influencer tools quickly, built on a robust and reliable data infrastructure, Modash API is the foundational layer of the creator economy with two distinct offering for pre-indexed and analyzed data and raw up-to-date data. The defining characteristic of the API architecture is the decoupling of data collection from data delivery. In data engineering, this is often referred to as a "Pipeline Model" applied to external data.

In the pipeline model, the fetching happens asynchronously. Modash continuously updates a proprietary database of over 350 million profiles across Instagram, TikTok, and YouTube. When a developer submits a query, it runs against this optimized, structured index.

- Speed: Queries return in milliseconds, enabling real-time filtering.

- Scale: Developers can analyze demographic data for millions of profiles.

The AI advantage

The pre-indexed nature of the pipeline allows for the application of advanced AI models on top of the data. Because Modash indexes full text of bios, captions, and hashtags, it enables semantic discovery. A brand can search for the concept or vibe of their campaign (e.g., "sustainable living working moms in Paris") and the API can surface creators who fit that narrative, even if they don't explicitly use the keyword "sustainable" in their bio.

The lookalike engine

Another great benefit of the pipeline architecture is the ability to generate lookalike audiences. By analyzing the graph overlap and content similarity of 350M+ profiles, Modash's API can accept a seed profile (e.g., a brand's best-performing influencer) and return a list of similar creators.

Case study: Migration to pipeline

Pietra, a platform that enables creators to launch product lines, faced the challenge of providing social listening and discovery to its users. Attempting to build this via self-collection would have required maintaining infrastructure to scrape millions of posts daily. By integrating the Modash API, Pietra offloaded data acquisition, allowing them to focus on their unique proposition. The Modash API serves as the backbone of Pietra Pulse, providing real-time insights with no additional overhead.

Official APIs: The walled gardens

Philosophy: "You can look, but only at what we own, and only on our terms."

Relying solely on official APIs (Instagram Graph, TikTok Creator Marketplace, YouTube Data) is a lesson in compromise. These official APIs are primarily designed to publish content and retrieve analytics for the authenticated user's account. That’s why they are architecturally averse to discovery. For example, Instagram limits hashtag searches to 30 unique queries per week, a constraint that makes market monitoring at scale impossible.

Instagram Graph API

- Pros: Great for campaign tracking. Once a creator grants access, you get granular metrics and demographics.

- Cons: It is a walled garden. The business discovery endpoint lets you query specific accounts, but it is not suitable for a broad keyword search.

TikTok API for Business

- Pros: Great for understanding demographics. It offers user age/gender distributions (not inferred) directly from the source.

- Cons: High barrier to entry. If you aren't a certified partner, you are stuck with the limited API or manual vetting.

YouTube Data API v3

- Pros: The only API that allows decent discovery. You can search content by keyword and collect view counts without creator permissions.

- Cons: Quota costs. Deep auditing (audience retention, detailed geo) still requires an authenticated token from the creator.

On-demand scrapers (Bright Data, Apify): The shovel

Philosophy: "Here's a shovel, a helmet, and a waiver. Good luck."

When official APIs aren’t enough, developers often turn to the shovel approach, building their own scrapers. This decision often leads to engineering debt, where the cost of maintaining the tool eventually eclipses the value of the data it retrieves.

Bright Data

Built mainly to aid access, Bright Data offers a network of proxies to make scraping easier. The main utility lies in the automatic handling of countermeasures.

- Pros: Reliable for difficult-to-access platforms as it manages headers, cookies, captchas, and fingerprinting. It also offers pre-scraped snapshots to give you a jump start.

- Cons: Custom jobs require understanding and operating their proprietary IDE, which might be a barrier to entry for small teams with existing operations and tooling. The data is also often collected in their own defined schema with their pre-built social APIs.

Apify

Apify takes a platform approach towards scraping by focusing mainly on custom pipelines for developers. They offer 'actors' or 'programs' that can be customized to create bespoke scrapers.

- Pros: You get a modular actor that can be forked, customized, and fixed in case something breaks. AI workflows and LLM-ready format, along with MCP servers that can browse social media anonymously.

- Cons: 'Actors' are usually community-maintained and not often enterprise-ready. Hence, they require active maintenance and scrutiny. Also, high-quality residential proxies are needed to avoid platform countermeasures, which might involve adding another vendor.

When does it make sense

Ultra-specific extraction needs (e.g., "scrape every comment from 1,000 specific posts for sentiment analysis") or regulatory environments where data sovereignty trumps time-to-market. For everything else, it's a distraction.

Scraping platforms only shift complexity. Scrapers only handle the sophisticated countermeasures that typically block internal teams, ensuring unrestricted access to the platforms. However, they leave the final interpretation and cleanup entirely to the user. You still need significant effort and expertise to maintain, clean, and polish raw data to make it eligible for analysis or for use in AI applications or agents, similar to what the pipeline model provides.

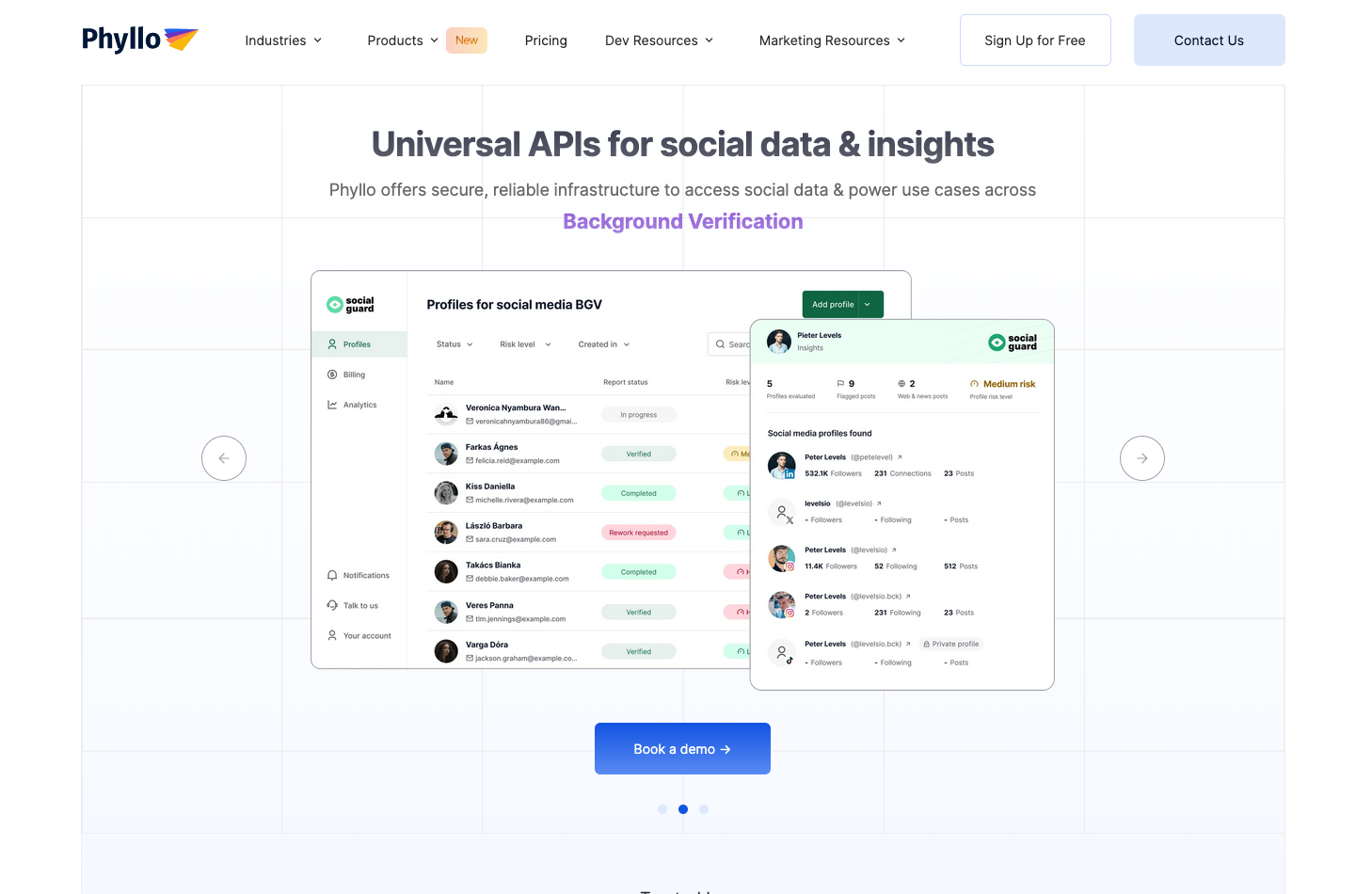

Phyllo API: The consent layer

Philosophy: "We ask permission so you don't have to apologize."

Phyllo allows apps to connect to user social accounts via a unified API. It defines itself as an API gateway that helps developers get access to user-permissioned data across source platforms such as YouTube, TikTok, Instagram, Twitter, Facebook, and more.

Mechanism: The developer embeds Phyllo's widget into their app. The creator is prompted to connect their account. They enter their credentials using a secure flow. Phyllo captures the token and maintains the connection.

Benefit: This approach grants access to private data that is usually invisible to the public web.

The verified advantage

Phyllo provides proof of account ownership. This is critical for brands that need to ensure they are working with the real creator. Users can also fetch monetization data (e.g., YouTube AdSense revenue) directly from the platform, along with authenticated data, such as impressions and reach.

Scope vs. Depth

The biggest limitation of Phyllo is that it requires user action. A developer cannot use Phyllo to search for influencers at scale. This makes Phyllo a post-acquisition tool rather than a discovery tool. It is used to vet and manage creators after they have been found and contacted.

Building on bedrock

The creator economy's data famine isn't ending. Platforms will continue to tighten their gates, AI will make discovery more valuable, and maintenance costs will only rise. In this environment, architectural laziness is fatal.

Choosing your data strategy is no longer a tactical decision; it's vital for success. The pipeline model offers velocity, the walled garden offers stability (for now), the shovel offers control at a steep price of expertise and maintenance, and the consent model offers verity.

Data, today, is a commodity and a moat. The winners aren't those who collect data, but those who interpret it and turn 350M profiles into actionable insights that brands will pay a premium for. Don't ask "Which API is cheapest?" Ask "Which API lets me focus on building what my customers actually value?"

The paradox will persist, but your paralysis doesn't have to.